Megatron-LM

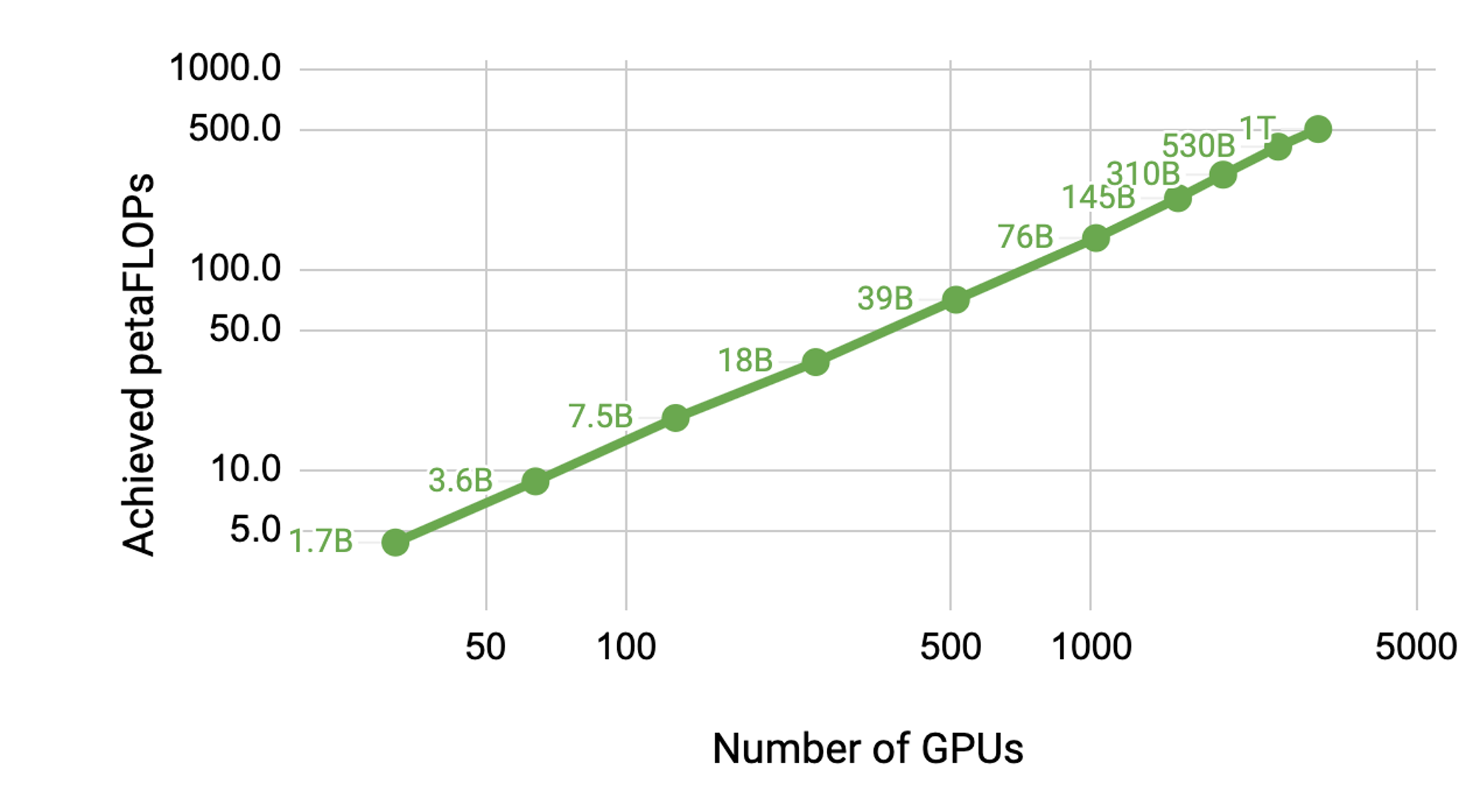

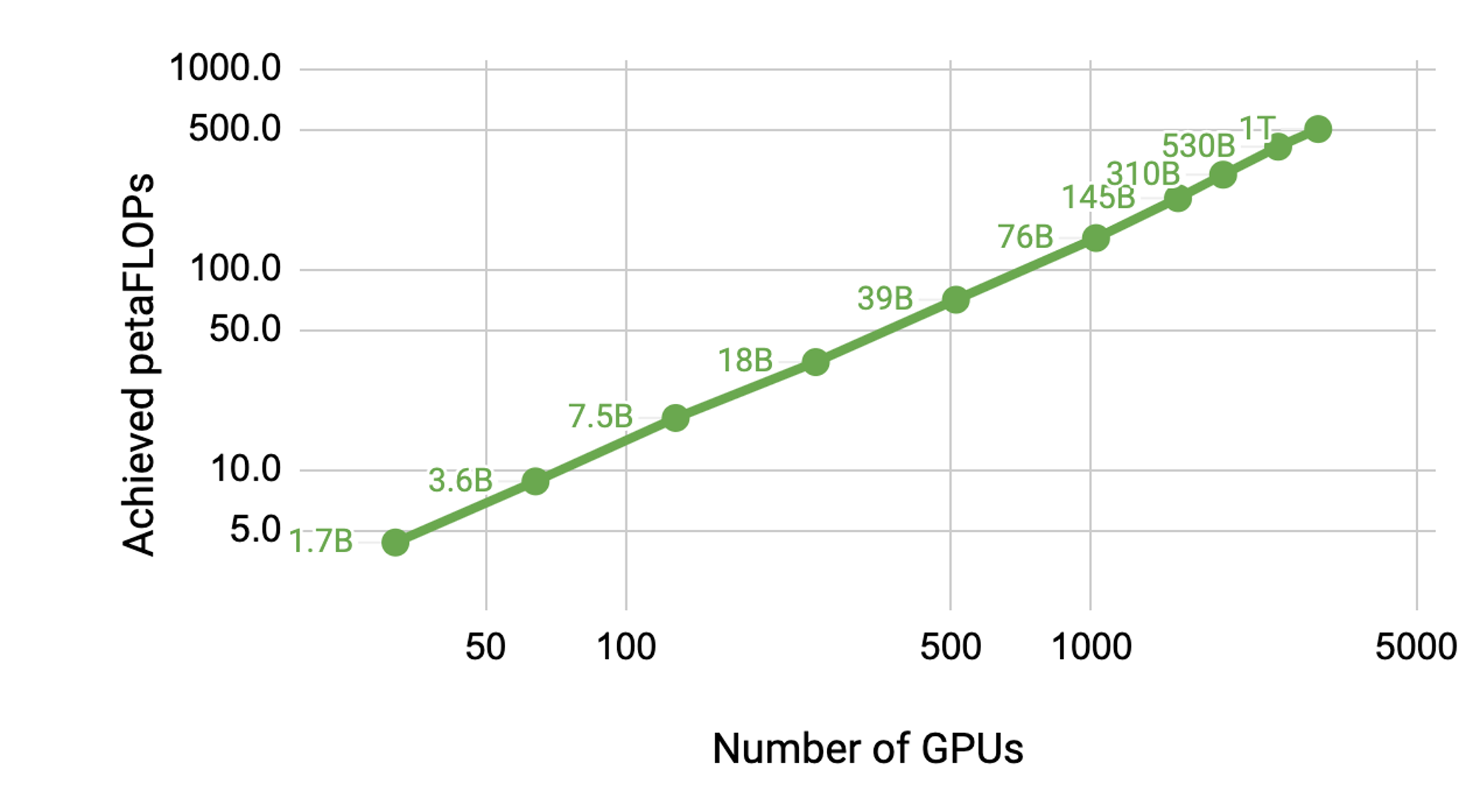

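Next we’ll run Megatron-LM (paper), NVIDIA’s framework for training transformer models with billions of parameters. It splits each layer’s weight matrices across GPUs (tensor model parallelism) so that models too large for a single device can still be trained.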

We’ll use the `pretrain_gpt_distributed.py` script to train a 7.5B-parameter GPT-style model.
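To show where a figure like 7.5B can come from, here is a rough parameter count for a GPT-2-style decoder. It is a sketch under stated assumptions. The layer count (36), hidden size (4096), padded vocabulary (51,200) and sequence length (2048) are hypothetical values picked to land near 7.5B, not the configuration used in this run. `gpt_param_count` is an illustrative helper, not part of Megatron-LM. The memory line uses the common 16-bytes-per-parameter rule of thumb for mixed-precision Adam.

```python
# Rough parameter and memory estimate for a GPT-2-style decoder-only transformer.
# ASSUMPTION: the config below is hypothetical; it is not the run's actual config.

def gpt_param_count(num_layers: int, hidden: int, vocab: int, seq_len: int) -> int:
    """Parameters of a GPT-2-style model: learned position embeddings,
    a 4*hidden MLP, and an output head tied to the token embedding."""
    # Attention: fused QKV (h x 3h + 3h bias) plus output projection (h x h + h bias).
    attn = 3 * hidden * hidden + 3 * hidden + hidden * hidden + hidden
    # MLP: h -> 4h (+4h bias) and 4h -> h (+h bias).
    mlp = 4 * hidden * hidden + 4 * hidden + 4 * hidden * hidden + hidden
    # Two LayerNorms per layer, each with a gain and a bias vector.
    norms = 4 * hidden
    per_layer = attn + mlp + norms  # = 12*h^2 + 13*h
    # Token + position embeddings; the output head reuses the token embedding.
    embeddings = vocab * hidden + seq_len * hidden
    final_norm = 2 * hidden
    return num_layers * per_layer + embeddings + final_norm


if __name__ == "__main__":
    # Hypothetical config chosen to come out near 7.5B parameters.
    params = gpt_param_count(num_layers=36, hidden=4096, vocab=51200, seq_len=2048)
    print(f"{params / 1e9:.2f}B parameters")  # prints "7.47B parameters"

    # Rule of thumb for mixed-precision Adam: fp16 weights (2 B) + fp16 grads (2 B)
    # + fp32 master weights (4 B) + two fp32 Adam moments (8 B) = 16 bytes/param.
    # Activations and temporary buffers are NOT included.
    state_bytes = params * 16
    print(f"~{state_bytes / 2**30:.0f} GiB of weights + optimizer state")  # prints "~111 GiB ..."
```

Even before activations, roughly 111 GiB of training state is more than a single 80 GB GPU can hold, which is why a model of this size has to be split across devices.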